How to build a CI/CD workflow with Skaffold for your application (Part III)

Using Gitlab, K8s, Kustomize and a Symfony PHP application with RoadRunner

🔥 This is the third (and last) part of the series "Full CI/CD workflow with Skaffold for your application".

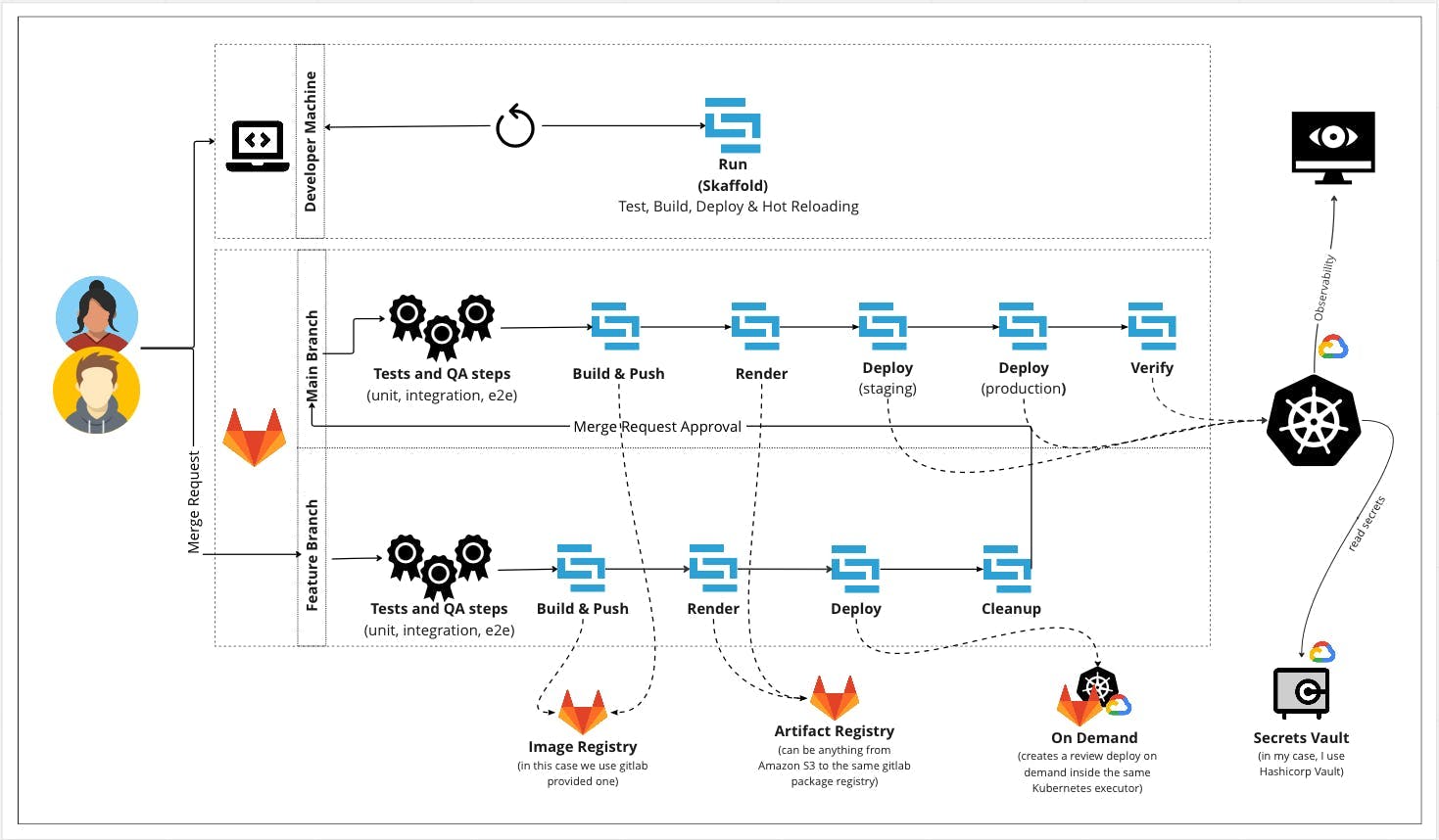

Let's recap: The Workflow

This is the workflow so far:

📣 You can check how we got to this point in the first two parts of the series.

Gitlab K8s agent and Security

The main piece of the K8s/Gitlab integration is the Gitlab K8s Agent which, in my experience, is the best and easiest way to integrate K8s with a DevOps platform like Gitlab.

Let's recap some steps

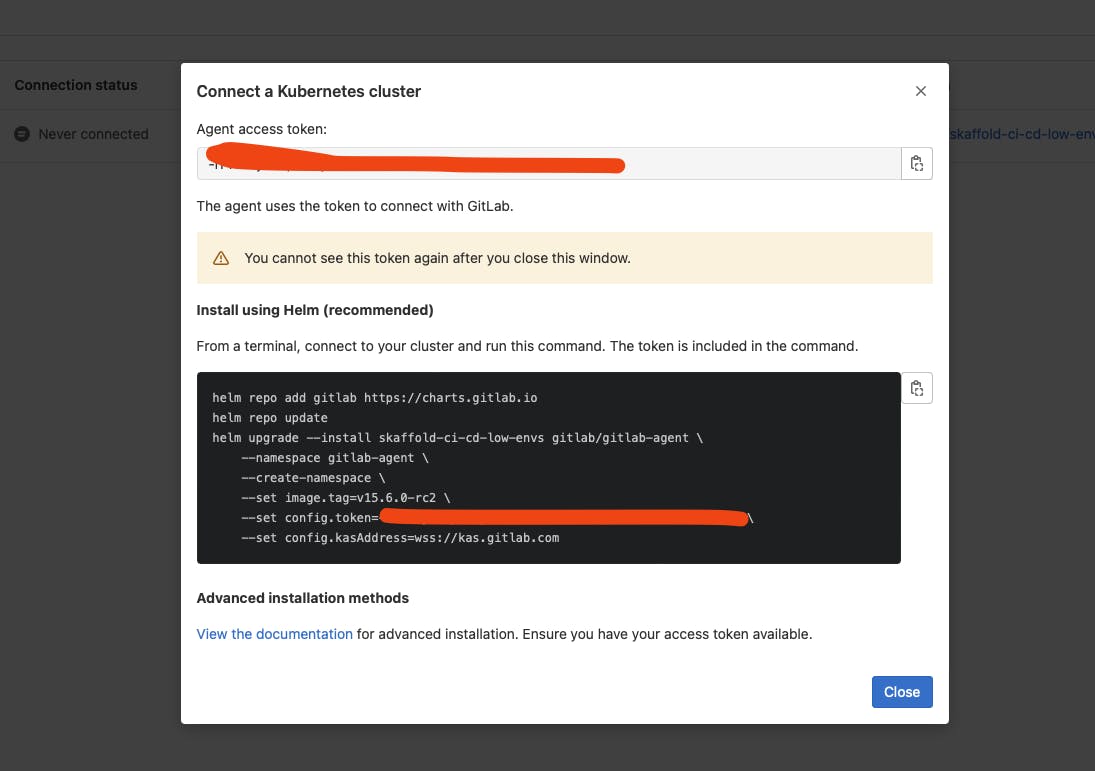

You need to add an Agent and then install a Helm chart in your cluster to enable secure communication between the two.

The agent can be configured in two ways:

- CI_ACCESS: allows the pipelines of the project repository to access the cluster; you are then in charge of managing how to deploy to the cluster.
- GITOPS_ACCESS: allows a full GitOps flow, like Argo CD for example, updating your cluster in a pull-based way, in sync with the main branch of the repository.

In my case I use the first one, CI_ACCESS, since I want to manage the whole process with Skaffold in a more granular way, so my configuration is much simpler.

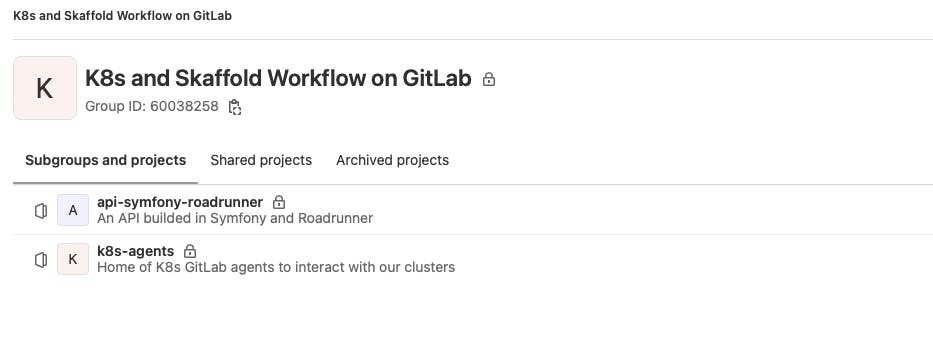

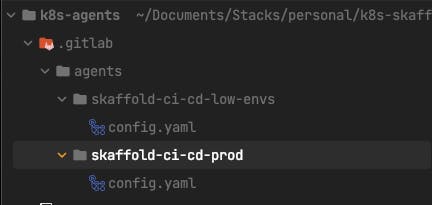

I have two repositories in an application group: one for the microservice itself and one for the agent. (The agent could also live in the microservice repository, but if you want more granular access, or want to share the agent/cluster between applications of the same stack, this is the best way.)

So the K8s-agents repository only holds the declarative config.yaml file for every agent we want to create (in this example I have two, one for lower-envs/runner and one for production, since they are two different clusters).

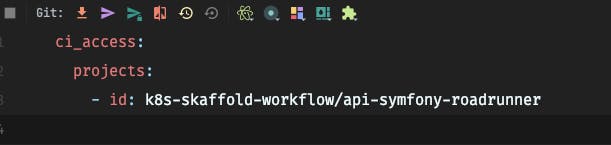

And in the config itself, I give access to all the projects that I want to use in the cluster.

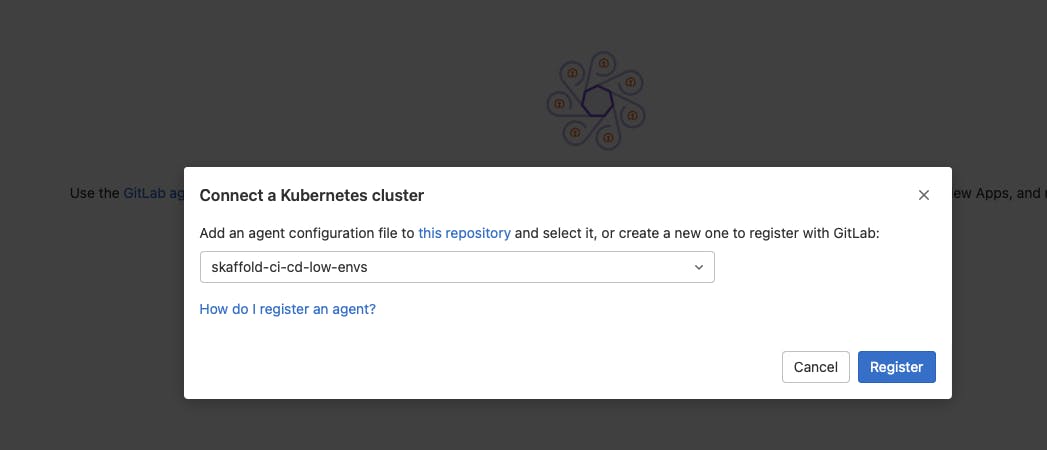
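Each agent's config lives at `.gitlab/agents/<agent-name>/config.yaml` in the agents repository. If you also want to manage that file as code, a minimal sketch with the GitLab Terraform provider (`gitlabhq/gitlab`) could look like the following: it registers an agent and commits a config that grants `ci_access` to a list of projects. The project paths, the `main` branch and the agent name `lower-envs` are hypothetical, and the provider is assumed to authenticate through the `GITLAB_TOKEN` environment variable.

```hcl
terraform {
  required_providers {
    gitlab = {
      source  = "gitlabhq/gitlab"
      version = ">= 16.0"
    }
  }
}

# Hypothetical path of the K8s-agents repository
variable "agents_project" {
  type    = string
  default = "my-group/k8s-agents"
}

# Hypothetical list of projects whose CI jobs may use the agent
variable "authorized_projects" {
  type    = list(string)
  default = ["my-group/my-microservice"]
}

# Registers the agent in the agents project
resource "gitlab_cluster_agent" "lower_envs" {
  project = var.agents_project
  name    = "lower-envs"
}

# Commits .gitlab/agents/<name>/config.yaml with a ci_access section
resource "gitlab_repository_file" "lower_envs_config" {
  project   = var.agents_project
  branch    = "main" # assumed default branch
  file_path = ".gitlab/agents/${gitlab_cluster_agent.lower_envs.name}/config.yaml"
  content = base64encode(yamlencode({
    ci_access = {
      projects = [for p in var.authorized_projects : { id = p }]
    }
  }))
  commit_message = "Add agent config for ${gitlab_cluster_agent.lower_envs.name}"
}
```

Adding a project to the list and re-applying is then the code equivalent of editing the config shown in the screenshot.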

Last but not least, you need to link the agent with the cluster. To do that, go to the K8s project's Kubernetes Cluster menu, where an interface gives you the instructions to link agent and cluster through a Helm chart installed in the cluster.
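Those instructions boil down to installing the `gitlab-agent` chart from `https://charts.gitlab.io` with the agent token and the KAS address. If you prefer to keep this step in Terraform, next to the other cluster prerequisites, a sketch with `helm_release` might look like this. The release and namespace names are hypothetical, the token is the one GitLab shows when you register the agent, and the Helm provider is assumed to be already configured against the target cluster. The values are passed with `yamlencode` rather than `set` blocks, whose syntax changed in Helm provider 3.x.

```hcl
terraform {
  required_providers {
    helm = {
      source  = "hashicorp/helm"
      version = ">= 2.7.0"
    }
  }
}

# Token displayed by GitLab when the agent is registered
variable "gitlab_agent_token" {
  type      = string
  sensitive = true
}

# KAS address from the GitLab install instructions
variable "gitlab_kas_address" {
  type    = string
  default = "wss://kas.gitlab.com" # GitLab.com; self-managed instances use their own address
}

resource "helm_release" "gitlab_agent" {
  name             = "lower-envs"              # hypothetical release name
  repository       = "https://charts.gitlab.io"
  chart            = "gitlab-agent"
  namespace        = "gitlab-agent-lower-envs" # hypothetical namespace
  create_namespace = true

  values = [
    yamlencode({
      config = {
        token      = var.gitlab_agent_token
        kasAddress = var.gitlab_kas_address
      }
    })
  ]
}
```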

After that, your cluster and Gitlab instance are linked, and your applications can use the cluster for Kubernetes-executor runners and also for dynamic review environments (dynamic QA instances, so to speak).

Deployment and Safety Recommendations for K8s Agents

To restrict access to your cluster, you can use impersonation. To specify impersonations, use the access_as attribute in your Agent's configuration file and use K8s RBAC rules to manage impersonated account permissions.

You can impersonate:

- The Agent itself (default)
- The CI job that accesses the cluster
- A specific user or system account defined within the cluster

Impersonation gives several security benefits:

- It lets you leverage your K8s authorisation capabilities to limit what can be done through the CI/CD tunnel on your running cluster
- It lowers the risk of granting unlimited access to your K8s cluster through the CI/CD tunnel
- It segments fine-grained permissions for the CI/CD tunnel at the project or group level
- It controls permissions for the CI/CD tunnel at the username or service account level
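To make this concrete: in the agent's `config.yaml`, the project entry under `ci_access` would get an `access_as.impersonate.username` pointing at a service account identity such as `system:serviceaccount:my-app:ci-deployer`, and RBAC then decides what that identity may do. Below is a minimal sketch of the RBAC side with the Terraform `kubernetes` provider. The namespace `my-app` (assumed to exist already), the `ci-deployer` name and the resource list are hypothetical and should match what your Skaffold/Kustomize manifests actually deploy; it also assumes the agent's own service account is allowed to impersonate service accounts.

```hcl
terraform {
  required_providers {
    kubernetes = {
      source  = "hashicorp/kubernetes"
      version = ">= 2.13.1"
    }
  }
}

locals {
  app_namespace = "my-app"      # hypothetical namespace of the microservice
  ci_identity   = "ci-deployer" # hypothetical identity impersonated by CI jobs
}

# Service account the agent impersonates for this project's CI jobs
resource "kubernetes_service_account_v1" "ci_deployer" {
  metadata {
    name      = local.ci_identity
    namespace = local.app_namespace
  }
}

# Namespaced permissions only: nothing cluster-wide
resource "kubernetes_role_v1" "ci_deployer" {
  metadata {
    name      = local.ci_identity
    namespace = local.app_namespace
  }

  rule {
    api_groups = ["apps"]
    resources  = ["deployments"]
    verbs      = ["get", "list", "watch", "create", "update", "patch", "delete"]
  }

  rule {
    api_groups = [""]
    resources  = ["services", "configmaps", "pods"]
    verbs      = ["get", "list", "watch", "create", "update", "patch", "delete"]
  }
}

# Binds the role to the impersonated service account
resource "kubernetes_role_binding_v1" "ci_deployer" {
  metadata {
    name      = local.ci_identity
    namespace = local.app_namespace
  }

  role_ref {
    api_group = "rbac.authorization.k8s.io"
    kind      = "Role"
    name      = kubernetes_role_v1.ci_deployer.metadata[0].name
  }

  subject {
    kind      = "ServiceAccount"
    name      = kubernetes_service_account_v1.ci_deployer.metadata[0].name
    namespace = local.app_namespace
  }
}
```

A CI job running under this identity can then roll out the application in `my-app`, but any attempt to touch other namespaces or cluster-scoped resources is rejected by the API server.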

Provisioning the cluster with Terraform

As I said, the main goal of this tutorial is to use the same tooling for local development, the pipeline and deployment. But (there is always a but) we have two sets of Terraform configuration. For local development, for example, I want, as a developer, as many of the observability tools I have in production as possible, in case I need to test metrics, build Grafana dashboards, etc., but without the complexity of the production infrastructure architecture.

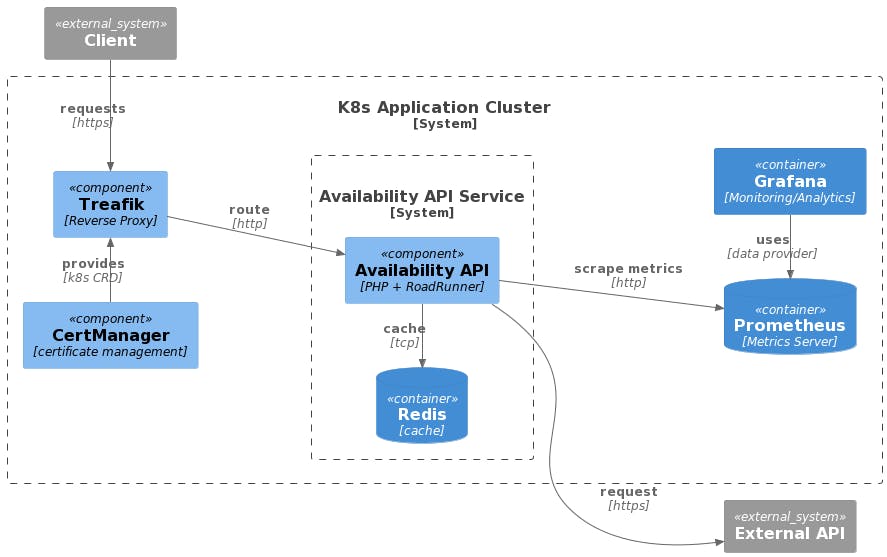

The diagram above is the application diagram from the first part, as a recap of what our local development stack looks like and how to achieve it with Terraform.

So, for this application structure, I want my local environment to have the cluster and its prerequisites, meaning all the other components inside the cluster that do not belong to the application itself (monitoring stack, Traefik, cert-manager, etc.).

To do that, I wrote simple modules that install those dependencies inside the local cluster, so they are available when I run my application locally with Skaffold.
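The modules only declare `required_providers`; the provider configuration itself belongs in the root module. For the local setup, a minimal sketch pointing all three providers at a local kubeconfig context could look like this. The context name `kind-local` is an assumption (use whatever context your local cluster tool creates), and the Helm provider is pinned to 2.x because the nested `kubernetes` block is 2.x syntax. Declaring `gavinbunney/kubectl` at the root also matters: without it, Terraform would assume `hashicorp/kubectl` for the root module.

```hcl
terraform {
  required_providers {
    kubernetes = {
      source  = "hashicorp/kubernetes"
      version = ">= 2.13.1"
    }
    helm = {
      source  = "hashicorp/helm"
      version = "~> 2.7" # nested kubernetes block below is Helm provider 2.x syntax
    }
    kubectl = {
      source  = "gavinbunney/kubectl"
      version = ">= 1.14.0"
    }
  }
}

# Hypothetical kubeconfig context of the local cluster
variable "kube_context" {
  type    = string
  default = "kind-local"
}

locals {
  kubeconfig = pathexpand("~/.kube/config")
}

provider "kubernetes" {
  config_path    = local.kubeconfig
  config_context = var.kube_context
}

provider "helm" {
  kubernetes {
    config_path    = local.kubeconfig
    config_context = var.kube_context
  }
}

provider "kubectl" {
  load_config_file = true
  config_path      = local.kubeconfig
  config_context   = var.kube_context
}
```

Keeping the context in a variable means the same root module can be pointed at another cluster with `-var kube_context=...` without touching the modules.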

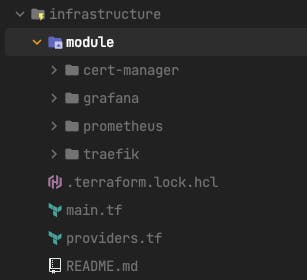

My infrastructure folder is structured like this:

Every module contains a main.tf configuration file that sets the desired state of the cluster once it is applied.

Let's take a look at the main file of one of these modules (prometheus):

```hcl
terraform {
  required_providers {
    kubernetes = {
      source  = "hashicorp/kubernetes"
      version = ">= 2.13.1"
    }
    helm = {
      source  = "hashicorp/helm"
      version = ">= 2.7.0"
    }
    kubectl = {
      source  = "gavinbunney/kubectl"
      version = ">= 1.14.0"
    }
  }
}

# Dedicated namespace for the monitoring stack
resource "kubernetes_namespace_v1" "monitoring_namespace" {
  metadata {
    name = var.monitoring_stack_namespace
  }
}

# Prometheus chart from the prometheus-community repository,
# customised through an override values file shipped with the module
resource "helm_release" "prometheus_stack" {
  name             = var.monitoring_stack_prometheus_name
  repository       = "https://prometheus-community.github.io/helm-charts"
  chart            = "prometheus"
  version          = var.monitoring_stack_prometheus_version_number
  namespace        = var.monitoring_stack_namespace
  create_namespace = false # the namespace is managed by the resource above

  values = [
    file("${path.module}/manifests/prometheus-override-values.yaml")
  ]

  depends_on = [
    kubernetes_namespace_v1.monitoring_namespace
  ]
}

# Ingress manifest for Prometheus, applied once the chart is installed
resource "kubectl_manifest" "prometheus_stack_ingress" {
  yaml_body = file("${path.module}/manifests/prometheus-ingress.yaml")

  depends_on = [
    helm_release.prometheus_stack
  ]
}
```
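The module references three input variables that are not shown above, and the root main.tf passes no inputs to it, so those variables need defaults. A possible `variables.tf` for the module is sketched below; the default values are assumptions, not the ones used in this setup.

```hcl
# variables.tf of the prometheus module (sketch; defaults are assumptions)
variable "monitoring_stack_namespace" {
  description = "Namespace that hosts the monitoring stack"
  type        = string
  default     = "monitoring"
}

variable "monitoring_stack_prometheus_name" {
  description = "Helm release name for Prometheus"
  type        = string
  default     = "prometheus"
}

variable "monitoring_stack_prometheus_version_number" {
  description = "Version of the prometheus-community/prometheus chart"
  type        = string
  # null means helm_release installs the latest chart version;
  # pin a tested version for repeatable local and CI setups
  default = null
}
```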

Then, in the root main.tf configuration file, you can wrap as many modules as you want; in my case, four modules were enough (prometheus, traefik, cert-manager and grafana):

```hcl
# Cluster prerequisites, installed in dependency order:
# cert-manager -> traefik -> prometheus -> grafana
module "cert_manager_stack" {
  source = "./module/cert-manager"
}

module "traefik_stack" {
  source = "./module/traefik"

  depends_on = [
    module.cert_manager_stack
  ]
}

module "prometheus_stack" {
  source = "./module/prometheus"

  depends_on = [
    module.traefik_stack
  ]
}

module "grafana_stack" {
  source = "./module/grafana"

  depends_on = [
    module.prometheus_stack
  ]
}
```

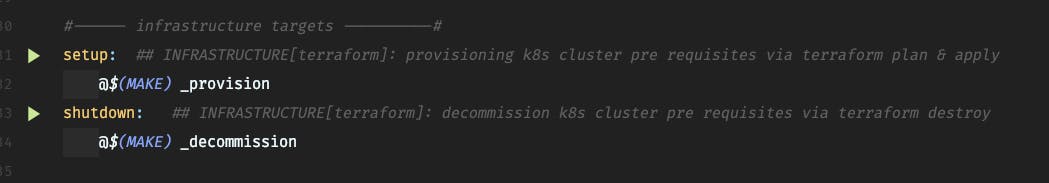

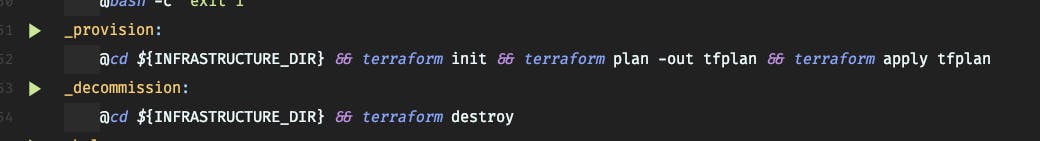

This also has handy targets in our Makefile, allowing developers and operators to easily set up and remove the cluster prerequisites.

Setting up the prerequisites takes about 3 minutes, but it is something you only need to do from time to time: you can set up your cluster today, work on your feature for a few days, and then shut it down.

After all this, you will have a fully functional Local-To-Prod pipeline. (If you want to see what the Gitlab CI file looks like, it is in the second part of this series.)

Next

This is the last part of the series, but next I'll write about the other tools I use to address different challenges in my day-to-day work.

If you are interested, the next topics I'll write about are:

- Managing database migrations at scale in Kubernetes for PHP applications with the symfony/migrations component
- Istio, Cert-Manager and Let's Encrypt: Secure your K8s cluster's communication with automated generation and provisioning of SSL certificates
- Internal Developer Platform: A modern way to run engineering teams
- The Digital War Room, or how to get observability for Engineering Managers across applications and teams

Support me

If you find this content interesting, please consider buying me a coffee :')